iPhone 17 Pro doubles AI performance for the next wave of generative models

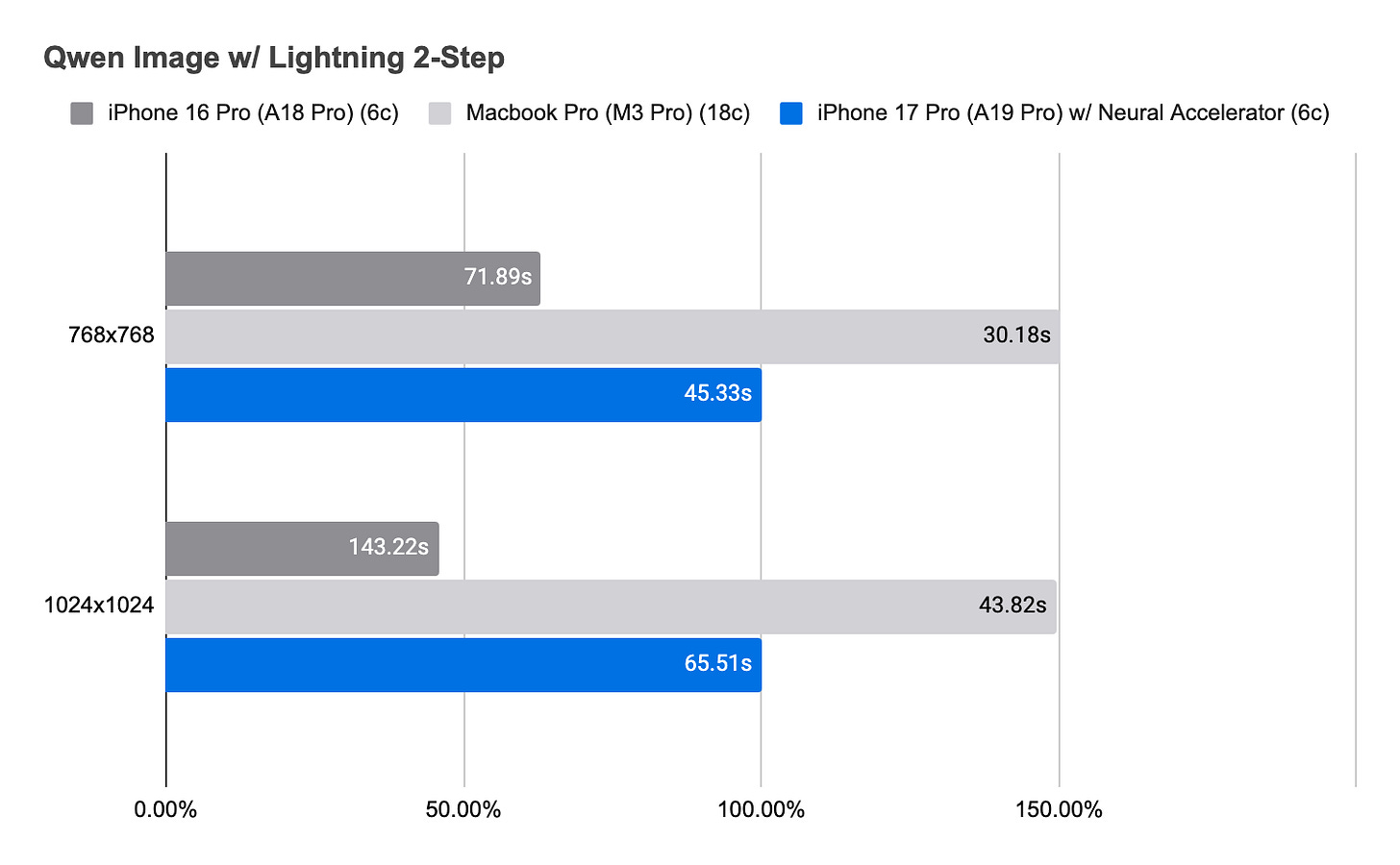

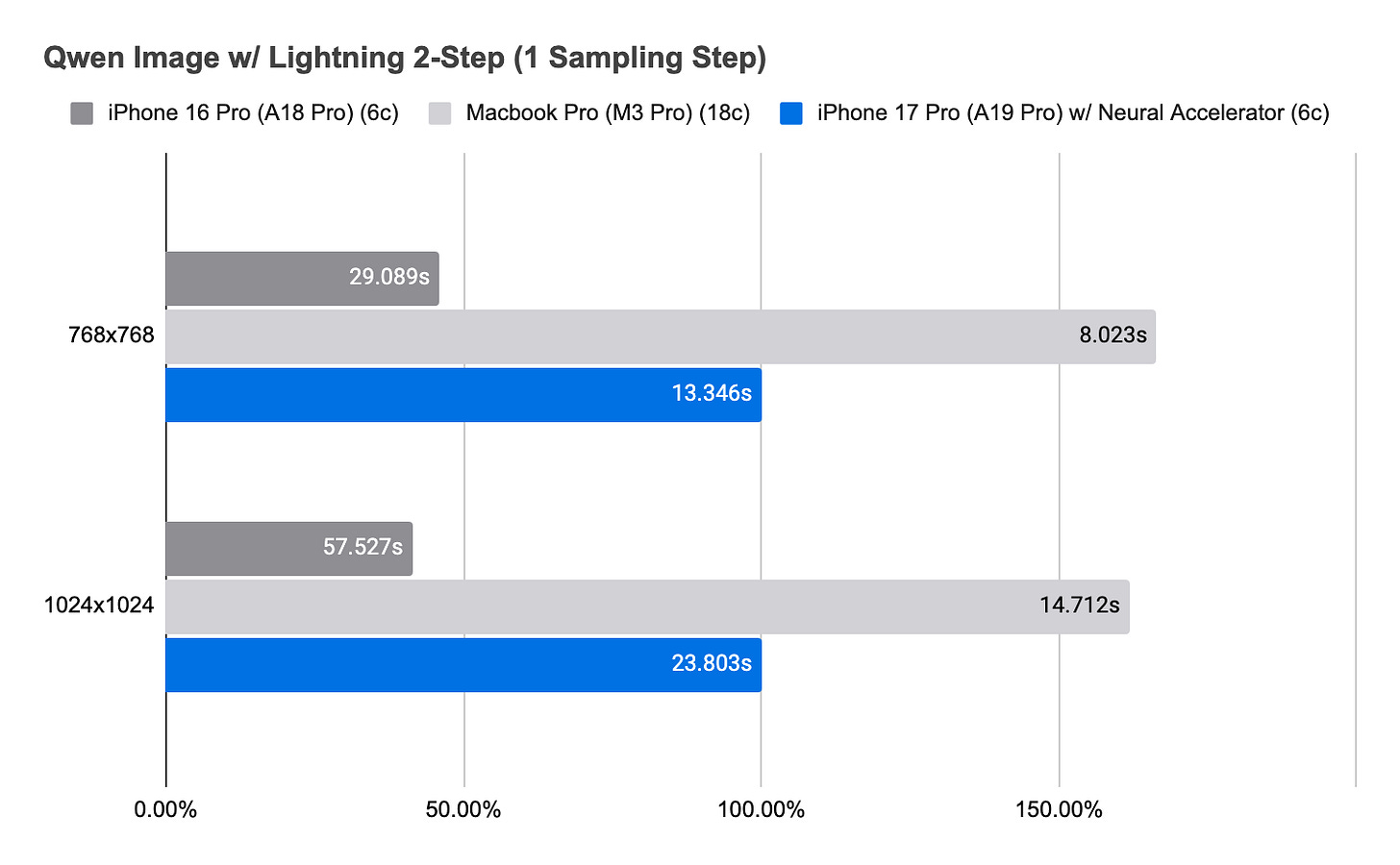

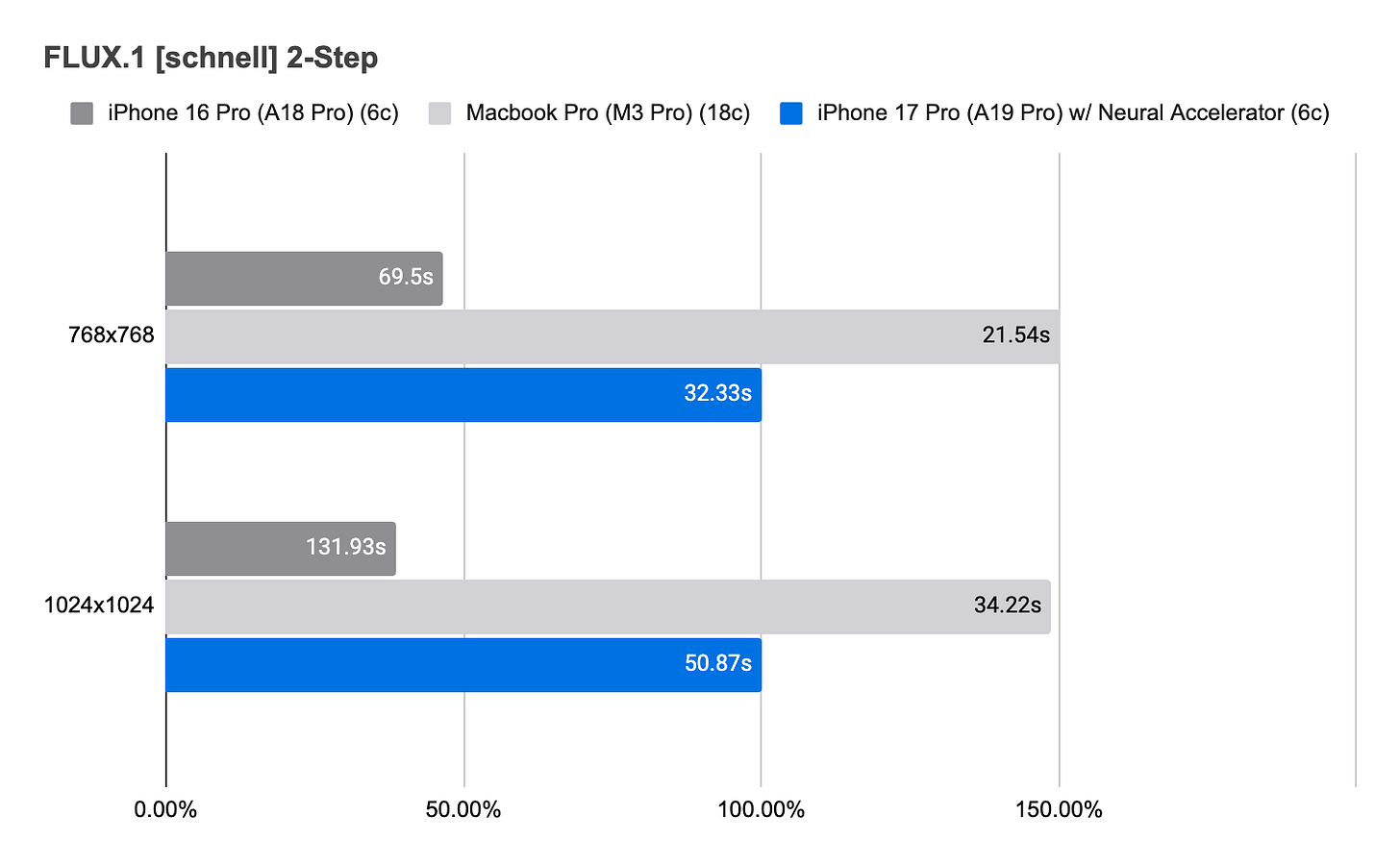

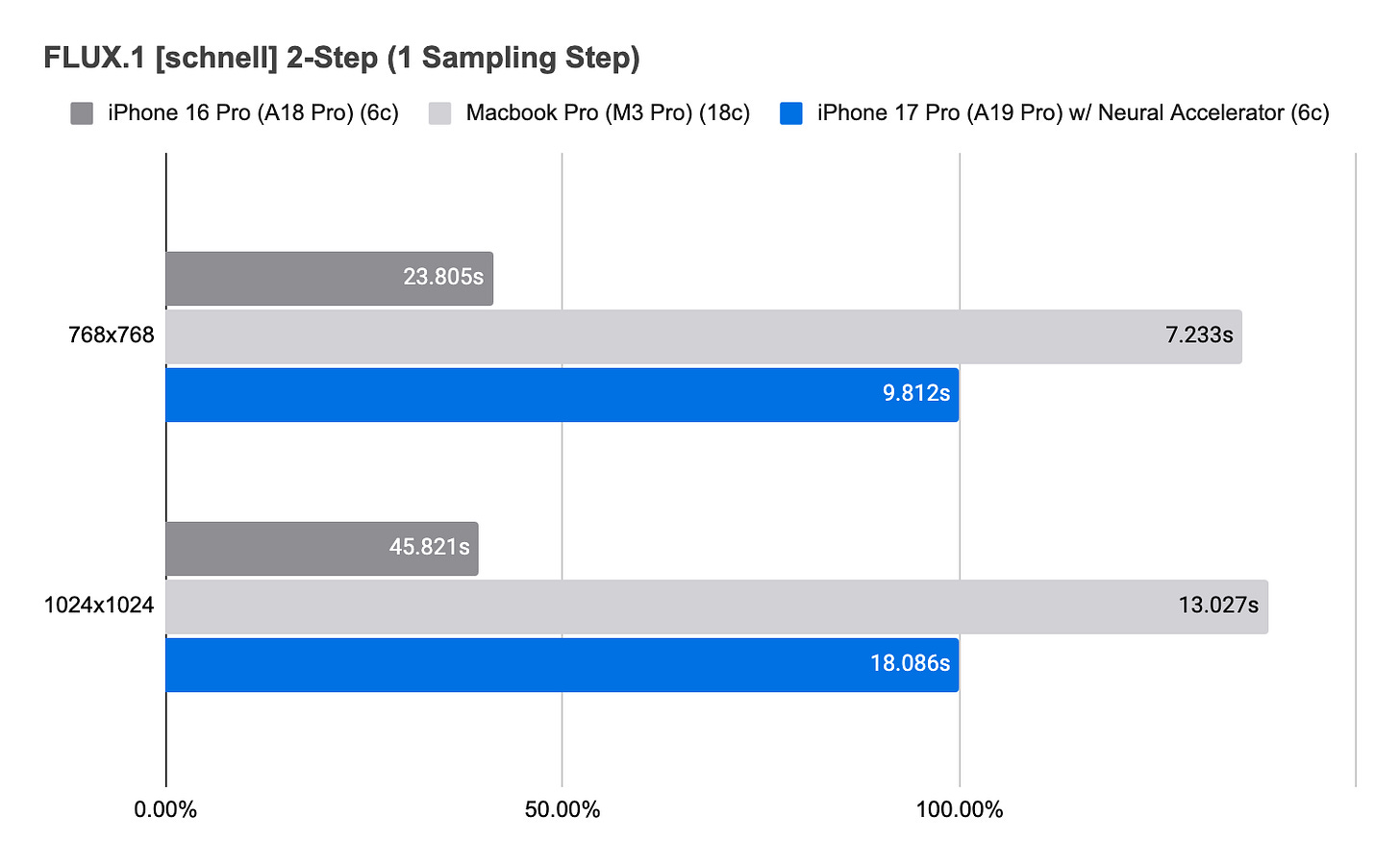

With the new GPU Neural Accelerators, the iPhone 17 Pro delivers a 2× leap in inference speed. FLUX.1 completes in under 35 seconds, while larger 20B-parameter models like Qwen Image finish in just over 45 seconds, all on-device.

The Apple Neural Engine (ANE) has long been a cornerstone of Apple’s SoCs, enabling fast and efficient on-device inference. It works well for smaller models, and Draw Things supports it with the Stable Diffusion v1.5 series at 512×512 resolution. But server-grade models with more than 10 billion parameters, such as FLUX.1, Qwen Image, Hunyuan Video, and Wan 2.2, are a different beast, and the ANE falls short when handling them.

The newly released iPhone 17 Pro features the A19 Pro SoC with new GPU Neural Accelerators. Combined with other GPU improvements, it delivers exceptional performance for its size. For compute-bound workloads such as diffusion model inference, Draw Things has seen a 2× generational improvement over the iPhone 16 Pro across many state-of-the-art models.

On iPhone 17 Pro, the FLUX.1 [schnell] / [dev] models at 768×768 run at ~10s per step, finishing 2-step inference in under 35 seconds. Even the latest 20B-parameter models, such as Qwen Image, run at ~13s per step, completing 2-step inference in just over 45 seconds at 768×768. At larger resolutions, efficiency shines even more: 1024×1024 inference takes just over 50 seconds for the FLUX series and around 65 seconds for the Qwen Image series, truly delivering MacBook Pro–like performance.
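As a rough sanity check on the 768×768 figures above, the sketch below subtracts the time spent in sampling steps from the quoted end-to-end time. The remainder is only an implied figure: the post does not break it down, so attributing it to work outside the sampling loop (for example text encoding, model loading, or image decoding) is an assumption. The totals used here are the approximate bounds quoted above ("under 35 seconds", "just over 45 seconds"), not measured values, and the function name is hypothetical.

```python
def implied_overhead(total_seconds, seconds_per_step, steps):
    """Return the part of the end-to-end time not spent in sampling steps."""
    return total_seconds - seconds_per_step * steps


# Approximate 768x768 figures quoted for iPhone 17 Pro.
# "total" is the quoted bound, treated here as a round number (assumption).
runs = {
    "FLUX.1 [schnell] / [dev]": {"per_step": 10.0, "steps": 2, "total": 35.0},
    "Qwen Image": {"per_step": 13.0, "steps": 2, "total": 45.0},
}

for name, run in runs.items():
    step_time = run["per_step"] * run["steps"]
    overhead = implied_overhead(run["total"], run["per_step"], run["steps"])
    print(f"{name}: ~{step_time:.0f}s in sampling steps, ~{overhead:.0f}s elsewhere")
```

Read this way, the per-step rate accounts for roughly 20 to 26 seconds of each run, which is why the end-to-end times are noticeably higher than steps × seconds per step.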

Draw Things remains focused on delivering the best media generation models—locally, privately, and efficiently. Stay tuned and subscribe for more exciting updates!

Notes

The iPhone 16 Pro numbers reflect typical daily usage without additional cooling (such as fans or ice packs). On that device, at a room temperature of 70°F (about 21°C), thermal throttling begins after about a minute, reducing performance for 1024×1024 and 1280×1280 generations. In contrast, the iPhone 17 Pro manages thermals far better, maintaining stronger sustained performance over longer runs.

What about the SD3.5 Large model? Will it also gain performance with the new SoC? Which settings and app version were used?